In a previous article, we spoke of Tractors and Genies, using them as metaphors for the power and unpredictability of generative AI. We noted how tools that feel like magic also demand new skills, new disciplines, and new ways of working. This new piece is a continuation of that story. If the first article was about realizing that the field has changed, that we no longer plough by hand, this one is about the daily practice of driving those tractors, and the routines we’re developing to make sense of the genie’s gifts.

Over the past couple of years, we have all been experimenting with generative AI in one way or another. Some of us used it inside projects, others explored it on the side, and in many cases the lines blurred. The experiments were valuable, but they also left us with a fragmented picture: everyone had stories to share, yet it was difficult to grasp the overall state of adoption.

This summer we decided to step back and gather a clearer view. We ran a set of internal interviews with front-end, back-end, and DevOps engineers, designers, and product/delivery professionals. The purpose was not to produce an academic paper but to capture a snapshot of lived experience: how GenAI is being used day to day, which practices are proving effective, and where the pain points are.

Here we focus specifically on how GenAI is being adopted within the digital solutions development process. The use of GenAI as an architectural component in products and applications that require it will be the subject of separate articles. What follows is therefore more about our own internal usage and evolving approach. The results highlight common themes, recurring tensions, and open questions that will shape how we evolve our way of working.

One of the clearest findings is that GenAI has transformed the pace of prototyping, while production work still requires a level of rigor that current tools struggle to sustain.

Tools like Lovable, often used for “vibe coding,” have become popular because they enable incredibly fast iteration. Colleagues across roles describe how prototypes that once required a week can now be assembled in two days, giving teams a tangible artefact to align around and bringing clients into the conversation much earlier. The speed matters: it accelerates feedback cycles, reduces ambiguity, and helps shape decisions before time and resources are heavily invested.

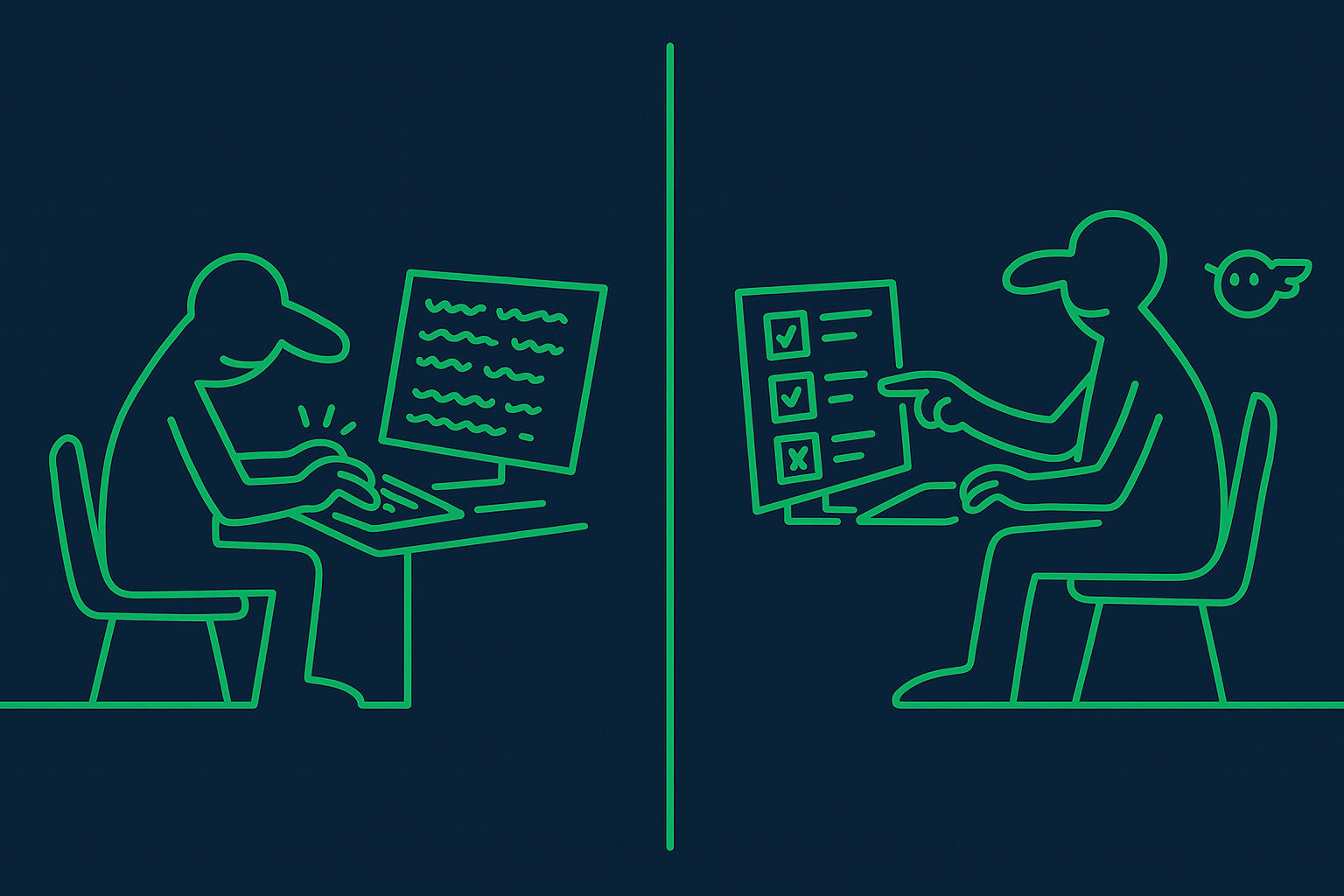

At the same time, these colleagues acknowledge the limitations. Designers find the outputs inconsistent and visually unpolished. Engineers see the generated code as messy and fragile, not the sort of foundation you would want to maintain over the long term. The result is a pattern that many described as a “dual lane”: one track for rapid prototyping and alignment, another for enterprise-grade production using tools such as Cursor, IntelliJ, Junie, Copilot, or Windsurf. Moving from one track to the other often means rewriting or significantly hardening what was created in the first phase.

In the tractor metaphor, these lanes are like the difference between ploughing a field quickly to test the soil and preparing it properly for a full season’s harvest. Both are useful, but they serve different purposes, and the tension between them is real.

The real challenge isn’t building fast or building strong, but finding ways to turn quick scaffolds into lasting structures.

The open question, and one we are actively grappling with, is whether this dual-lane setup should remain the norm. There is a case for it: rapid prototypes are useful precisely because they are disposable, and production code deserves its own discipline. Yet there is also a strong incentive to reuse more of what is created upfront, which would mean developing better practices during the vibe-coding phase so that prototypes are not only quick demonstrations but also viable stepping stones toward production. Whether the answer lies in raising the quality of prototype outputs or in streamlining the hardening step that bridges prototype and production is not yet clear. What is clear is that this tension will define much of the practical evolution of GenAI in software development.

Another major theme is the use of AI as a knowledge partner, sometimes described as a “secondary brain.” Teams are increasingly setting up project-specific GPTs, curated notebooks, and GPT-powered Confluence or Figma hubs. The aim is straightforward: cut down repetitive Q&A, speed up onboarding, and make sure critical context is always at hand.

The benefits are easy to see. New joiners can get up to speed more quickly. Team leaders avoid answering the same questions over and over again. Instead of digging through multiple tools and documents, teams can query a curated knowledge base and receive a coherent answer.

But the friction points are just as evident. Knowledge drifts quickly, and without deliberate maintenance these systems lose accuracy. The tool stack itself is not yet stable: different people gravitate to different environments, and each person is left to configure context on their own. This leads to duplication of effort and inconsistencies across teams.

As we move past experimentation and converge on more stable workflows, we can expect this fragmentation to become less of an issue. Other friction points remain: hallucinations still occur, especially in longer or more ambiguous tasks, and there are ongoing security concerns about connecting AI directly to codebases or sensitive environments. Even so, there is clear evidence of progress and a visible path ahead.

All of this points to an emerging discipline some have started to call context engineering. The name is partly tongue-in-cheek, much as “prompt engineering” was mocked for not being real engineering, but the underlying need is real. Where prompt design was about shaping a single request, context engineering widens the scope to how we structure and maintain knowledge so that prompts are always supplied with curated, relevant information.

“The problem is, you know, keeping this knowledge base up to date and it's like kind of hard… how to create this knowledge base so it's updated automatically and you can just query it whenever you need.” – Andrej, Solutions Architect

In the earlier article we spoke of professionals co-piloting the tractor. Context engineering is the work of building the cockpit: the dials, maps, and gauges that make sure the AI model has the right information to be useful. It will likely become as critical to software development as version control or testing practices.

Across the interviews, we were told of remarkable productivity gains. Depending on the task, the acceleration was described as three to five times faster, with some edge cases reaching as high as ten times. The clearest benefits came in areas such as scaffolding new projects, generating boilerplate, writing tests, or picking up an unfamiliar language or framework.

One colleague described compressing weeks of Kotlin learning into days. Another highlighted an internal hackathon as the turning point, where work that would normally require two days was delivered in a few hours. Several spoke of situations where “80–90% of a new build” was generated correctly in the first draft, requiring only adjustments and validation.

It is important to note that these individual productivity gains do not translate directly into project timelines shrinking at the same rate. They shorten parts of the process, but overall velocity is constrained by other factors such as the need for human review, discussions, and integration. One useful way to understand this is by distinguishing between touch time and lead time. Touch time is the period when someone is actively working on a task, such as reviewing code or drafting a feature. This might only take a couple of days. Lead time, on the other hand, covers everything from the moment the task is created until it is completed, which can stretch into weeks because of queues, dependencies, or waiting for other resources. GenAI often reduces touch time, but unless we address the bottlenecks that create long lead times, overall delivery speed does not improve as dramatically.
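To make the distinction concrete, here is a back-of-the-envelope calculation. The numbers are purely illustrative assumptions, not figures from the interviews: a task with 2 days of touch time inside a 15-day lead time, where GenAI makes the hands-on part four times faster (within the three-to-five range reported above) and the waiting time stays unchanged.

$$
\begin{aligned}
T_{\text{lead}} &= T_{\text{touch}} + T_{\text{wait}} = 2 + 13 = 15 \text{ days} \\
T'_{\text{lead}} &= \frac{T_{\text{touch}}}{4} + T_{\text{wait}} = 0.5 + 13 = 13.5 \text{ days} \\
\frac{T_{\text{lead}}}{T'_{\text{lead}}} &= \frac{15}{13.5} \approx 1.11
\end{aligned}
$$

Under these assumptions, a fourfold gain in touch time shortens lead time by only 10%, because the 13 days of waiting are untouched. This is the same logic as Amdahl’s law: the overall speedup is capped by the share of the work the improvement does not reach.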

This creates two possible paths: we can either use the saved time to work on more tasks in parallel, or we can focus on removing the roadblocks that slow down individual tasks. Parallelism can be positive, giving professionals the chance to contribute to more diverse challenges. But it also brings the challenge of task and context switching, which can be draining, as humans are simply not wired to juggle multiple threads of attention efficiently.

What we can say with confidence is that GenAI is already raising the quality of outputs within the same timeframe. The open question is not whether there are benefits (those are clear) but how these benefits will scale. Will they allow us to complete more in parallel, or will they enable us to rethink processes so that lead times shrink as well? That is the broader question, and one that will only be answered as new ways of working continue to mature.

“I honestly think I’m at least three to five times faster.” – Engineer sentiment (across multiple interviews).

Alongside productivity, roles are clearly evolving. Fewer colleagues think of themselves as “writing code” in the traditional sense. More see their value in reviewing, curating, steering, and integrating what the AI produces. The keyboard is still part of the process, but it is less about producing every line and more about making sure outputs are correct, secure, and aligned with broader goals.

Developers are shifting from line-by-line coding to guiding and validating AI outputs.

This evolution will also affect how we think about hiring. It does not mean that juniors have no place, but flexibility and the ability to move across stacks have become superpowers. People who are willing to adapt quickly and dive into multiple technologies are better positioned to harness AI effectively.

As we wrote in the tractor piece: once the genie is out of the bottle, the skill is not to bottle it back up, but to learn how to guide it. The same shift is visible here: the engineer’s role is less about brute force and more about steering, validating, and integrating.

Overall, the shift does not diminish the importance of colleagues across functions; if anything, it raises the bar. Critical thinking, cross-disciplinary awareness, and the ability to guide and refine AI outputs are becoming baseline expectations. What matters is not only technical fluency, but also the judgment to know when to accept, when to challenge, and when to intervene directly.

“The important thing with all these tools is that you have to give it context, precise context or guidelines. Otherwise it gets lost.” – Cristiano, Solutions Architect

A final theme is how adoption happens in practice. A culture that allows for experimentation is essential, because the tooling is still evolving very fast. Hackathons and internal events, combined with opportunities to explore AI whenever projects offer the right context, play a key role and will need to continue. Running projects where AI itself is the product also helps organisations build skills and understanding.

At the same time, even companies focused on more standard digital solutions (or where software is just a supporting product) should keep experimenting with GenAI tooling. Some engineers told us they would “cry” if GenAI ever became unavailable. It is hard to find someone who has tried it and not seen a use for it, and you do not need to be a machine learning specialist to benefit.

While experimentation must continue, it is also time to start stabilising workflows where possible, to avoid duplicated work and confusion. This now feels increasingly achievable, as tooling evolves to allow tighter control over generated code and better integration of knowledge bases. It remains a work in progress, but we expect this to be the next focus for many change management efforts, both for us and for the clients we serve.

“I was skeptical… until the hackathon forced me to use it. I realized what usually took two days could be done in a few hours.” – Mattia, DevOps Engineer

These observations are not abstract. They come from what we see at Enrian when we design digital solutions end‑to‑end, with business and technology considered together rather than in isolation. That way of working naturally shapes how we read the signals from our survey: the things we notice, and the things we think matter, come from sitting alongside clients and focusing on solutions that aim to make a real business difference on top of a solid technical approach.

In that light, our findings point to a field that is maturing, but still very much in motion:

GenAI is not simply another tool to add to the stack. It is reshaping how we work, the skills we rely on, and the workflows we follow. The opportunity is to turn scattered experiments into disciplined practice, while keeping sight of the fundamentals: quality, security, and human judgment.

The story is still being written, but the direction is clear. This survey is just one step in understanding what that future looks like. In the coming months, we will share more perspectives, including how GenAI is shaping products and architectures as well as best practices. The field is evolving quickly, and so are we. Stay tuned.